Computational Fluid Dynamics

CFD Simulations for the Huygens-Cassini Probe

Roy Williams

Caltech CACR

Jochem Hauser, Ralf Winkelmann

Center for Logistics and Expert Systems, Germany

Hans-Georg Paap

Genias GmbH, Regensburg, Germany

Martin Spel, Jean Muylaert

ESTEC, Noordwijk, Netherlands

In 2004, after a seven-year journey past Venus and Jupiter, the Cassini spacecraft will reach the vicinity of Saturn. Piggybacked on this NASA vehicle is the European Huygens probe, which will drop into the atmosphere of Saturn's largest moon, Titan. During the three-hour descent, delicate instruments will emerge from the bow surface to measure the atmospheric composition and to take pictures of the surface. Titan is especially interesting because its atmosphere contains complex hydrocarbons reminiscent of the paleoatmosphere in which life began on Earth.

The objective of this simulation is to gauge the effects of any dust that might be suspended in the Titanian atmosphere: an instrument could be jeopardized if it is significantly impacted by high-speed particles. We shall use streamlines to determine whether the instrument lenses receive a larger or smaller dust impact rate than other parts of the craft. Some initial results, computed on 128 nodes of the Caltech Paragon, are shown in Figures 1-3.
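The streamline idea can be illustrated with a minimal sketch. It assumes a stand-in velocity field (2D inviscid potential flow past a cylinder of radius 1, not the Parnss solution on the actual probe grid) and traces streamlines with a classical fourth-order Runge-Kutta integrator. The names `velocity`, `stream_function`, `trace_streamline`, and `closest_approach` are hypothetical. Because the stream function is constant along a streamline, it provides a convenient accuracy check. A streamline only shows where massless fluid goes; dust particles with inertia can cross streamlines near the bow, so an impact-rate estimate would additionally need particle trajectories.

```python
import math

U_INF = 1.0   # free-stream speed (nondimensional; hypothetical value)
RADIUS = 1.0  # cylinder radius standing in for the bow (hypothetical)


def velocity(x, y):
    """Potential flow past a cylinder, free stream along +x: returns (u, v)."""
    r2 = x * x + y * y
    a2 = RADIUS * RADIUS
    u = U_INF * (1.0 - a2 * (x * x - y * y) / (r2 * r2))
    v = -U_INF * 2.0 * a2 * x * y / (r2 * r2)
    return u, v


def stream_function(x, y):
    """psi = U y (1 - a^2 / r^2); constant along every streamline."""
    return U_INF * y * (1.0 - RADIUS * RADIUS / (x * x + y * y))


def trace_streamline(x, y, dt=0.01, steps=1500):
    """Integrate dx/dt = u(x, y) with classical RK4; return the visited points."""
    points = [(x, y)]
    for _ in range(steps):
        k1 = velocity(x, y)
        k2 = velocity(x + 0.5 * dt * k1[0], y + 0.5 * dt * k1[1])
        k3 = velocity(x + 0.5 * dt * k2[0], y + 0.5 * dt * k2[1])
        k4 = velocity(x + dt * k3[0], y + dt * k3[1])
        x += dt / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0])
        y += dt / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1])
        points.append((x, y))
    return points


def closest_approach(points):
    """Smallest distance from the body surface along a traced streamline."""
    return min(math.hypot(px, py) for px, py in points) - RADIUS


if __name__ == "__main__":
    # Streamlines released closer to the axis pass closer to the surface.
    for y0 in (0.1, 0.5, 1.0):
        pts = trace_streamline(-5.0, y0)
        print(f"start y={y0:.1f}: closest approach {closest_approach(pts):.3f}")
```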

Figure 1 | Figure 2 | Figure 3

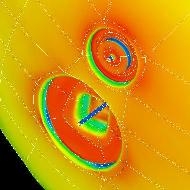

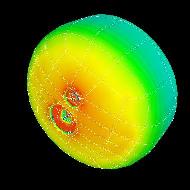

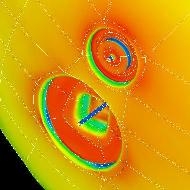

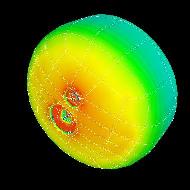

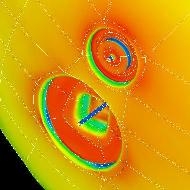

Figures 1-3. The Huygens-Cassini probe as it enters the nitrogen-methane atmosphere of Saturn's largest moon, Titan. We see the bow face of the craft. Figures 1-3 show increasing magnification of the delicate instruments that measure atmospheric properties during the subsonic descent. In this Euler flow simulation, computed on 128 nodes of the CSCC Intel Paragon, color represents temperature, increasing from blue to red. The white lines in the first two panels are boundaries of the 462-block 3D grid; the last panel shows the surface grid. A major open question is how the impact of suspended dust affects the instruments.

We are using a compressible Navier-Stokes code, Parnss, which is designed to simulate flows from the hypersonic to the subsonic range, with complex geometry and real-gas effects. Parnss has been used to evaluate the flow around the Shuttle and the European Hermes spaceplane, as well as to calibrate hypersonic wind tunnels.

Parnss uses a multiblock grid that, for a complex geometry, may have hundreds of blocks. Where boundary layers must be resolved, grid cells may have aspect ratios as high as 1,000,000 to one. The solver can be run either to converge to a steady state or in a time-accurate mode.
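To make the 1,000,000-to-one figure concrete, here is a minimal sketch of one common way to build such boundary-layer spacing: geometric (constant-ratio) stretching in the wall-normal direction of a single block. It is an illustrative assumption, not the Parnss grid generator; the function names and the sample values (first cell thickness 1e-6, layer thickness 1, tangential spacing 1, 64 cells) are hypothetical. The stretching ratio q is found by bisection so that the cell thicknesses sum to the prescribed layer thickness.

```python
def stretched_spacing(first, total, n):
    """Cell thicknesses first, first*q, ..., first*q**(n-1) that sum to total.

    The geometric sum S(q) = first * (q**n - 1) / (q - 1) increases with q,
    so the stretching ratio q > 1 is found by bisection.
    """
    if n < 2 or first * n >= total:
        raise ValueError("need n >= 2 and first * n < total for a stretched grid")

    def geometric_sum(q):
        return first * (q ** n - 1.0) / (q - 1.0)

    lo, hi = 1.0 + 1e-12, 2.0
    while geometric_sum(hi) < total:   # widen the bracket if needed
        hi *= 2.0
    for _ in range(200):               # bisection on the stretching ratio
        mid = 0.5 * (lo + hi)
        if geometric_sum(mid) < total:
            lo = mid
        else:
            hi = mid
    q = 0.5 * (lo + hi)
    return [first * q ** k for k in range(n)]


def max_aspect_ratio(tangential, normal_spacings):
    """Largest tangential-to-normal cell size ratio (it occurs at the wall)."""
    return max(tangential / h for h in normal_spacings)


if __name__ == "__main__":
    h = stretched_spacing(first=1e-6, total=1.0, n=64)
    print(f"stretching ratio q = {h[1] / h[0]:.4f}")
    print(f"wall aspect ratio  = {max_aspect_ratio(1.0, h):.3g}")
```

Geometric stretching keeps the ratio between neighboring cells constant, which avoids abrupt jumps in spacing while still packing cells tightly against the wall.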

The solver approaches the steady state in three stages: first an explicit scheme for efficient treatment of transients, then a block-implicit scheme for faster convergence of the shock position, and finally a Newton solver that converges quadratically to the steady state. We are evaluating criteria for automatic transition between these phases, to obtain maximum computational efficiency with minimal human interaction. For time-accurate flow modeling, the objective is to see a time history of the flow rather than just a single steady state, so the I/O, storage, and visualization subsystems become the primary bottlenecks of the computation.
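The three-stage strategy and the notion of automatic transitions can be illustrated on a toy problem. This is a minimal sketch, not the Parnss implementation: it drives the scalar residual R(u) = u^3 + u - 2 (steady state u = 1) toward zero with explicit pseudo-time steps, then linearized backward-Euler (implicit) steps with a large time step, and finally Newton iterations, switching stages when the residual falls below fixed thresholds. The function names and all threshold and time-step values are hypothetical; practical criteria might also watch for stalled convergence and would act on block-structured systems rather than a single unknown.

```python
def residual(u):
    """Steady-state residual R(u); the steady state is its root u* = 1."""
    return u ** 3 + u - 2.0


def jacobian(u):
    """dR/du, used by the implicit and Newton stages."""
    return 3.0 * u ** 2 + 1.0


def staged_solve(u, dt_explicit=0.05, dt_implicit=5.0,
                 to_implicit=1e-1, to_newton=1e-4, tol=1e-12, max_iter=10_000):
    """Pseudo-time march du/dt = -R(u) with automatic stage switching.

    Returns the converged value and a history of (stage, |R|) per iteration.
    """
    stage = "explicit"
    history = []
    for _ in range(max_iter):
        r = residual(u)
        if abs(r) < tol:
            return u, history
        # Hypothetical transition criteria: fixed residual thresholds.
        if stage == "explicit" and abs(r) < to_implicit:
            stage = "implicit"
        elif stage == "implicit" and abs(r) < to_newton:
            stage = "newton"
        history.append((stage, abs(r)))
        if stage == "explicit":
            # Forward Euler in pseudo-time: cheap and robust for transients.
            u -= dt_explicit * r
        elif stage == "implicit":
            # Linearized backward Euler: (1/dt + J) du = -R.
            u -= r / (1.0 / dt_implicit + jacobian(u))
        else:
            # Newton: the dt -> infinity limit; quadratic convergence near u*.
            u -= r / jacobian(u)
    raise RuntimeError("did not converge")


if __name__ == "__main__":
    u, hist = staged_solve(0.0)
    for stage in ("explicit", "implicit", "newton"):
        n = sum(1 for s, _ in hist if s == stage)
        print(f"{stage:8s}: {n} iterations")
    print(f"u = {u:.12f}")
```

Writing the implicit stage as (1/dt + J) du = -R shows why the sequence is natural: as dt grows, the implicit step approaches the Newton step.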

With Ronald D. Henderson of Caltech, we have recently implemented a new output path that enables real-time visualization of the solver's behavior. Each processor of the Paragon sends its data to a HIPPI node, from which it travels through the Caltech HIPPI fabric to a Silicon Graphics workstation that displays the current flow using the visualization code Visual3 from MIT. Only a small part of the simulation data, presumably the interesting part, needs to be sent to the Visual3 server. This output path reduces the need for storage, transfer, and postprocessing of large data files, with the beneficial side effect of making it much easier to understand the behavior of the simulation, whether that is the convergence history in the steady-state case or the flow itself in the time-accurate case.
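The idea of shipping only a region of interest can be sketched as follows. This is a hypothetical illustration, not the Paragon/HIPPI code or Visual3's input format: each block keeps only the points inside an axis-aligned box and packs them into a compact little-endian binary message (a header with block id and point count, followed by float32 x, y, z, temperature records) using Python's standard `struct` module. The class, the function names, the box, and the message layout are all assumptions.

```python
import struct
from dataclasses import dataclass

HEADER = struct.Struct("<ii")    # block id, number of points (hypothetical layout)
RECORD = struct.Struct("<ffff")  # x, y, z, temperature as float32


@dataclass
class BlockData:
    block_id: int
    points: list        # [(x, y, z), ...]
    temperature: list   # one value per point


def in_box(p, lo, hi):
    """True if point p lies inside the axis-aligned box [lo, hi]."""
    return all(a <= c <= b for c, a, b in zip(p, lo, hi))


def pack_region(block, lo, hi):
    """Serialize only the points of `block` inside the region of interest."""
    keep = [i for i, p in enumerate(block.points) if in_box(p, lo, hi)]
    parts = [HEADER.pack(block.block_id, len(keep))]
    for i in keep:
        x, y, z = block.points[i]
        parts.append(RECORD.pack(x, y, z, block.temperature[i]))
    return b"".join(parts)


def unpack_region(buf):
    """Inverse of pack_region, as the visualization side might decode it."""
    block_id, n = HEADER.unpack_from(buf, 0)
    records = [RECORD.unpack_from(buf, HEADER.size + k * RECORD.size)
               for k in range(n)]
    return block_id, records


if __name__ == "__main__":
    pts = [(i / 1000.0, 0.0, 0.0) for i in range(1000)]
    blk = BlockData(block_id=7, points=pts,
                    temperature=[100.0 + i for i in range(1000)])
    msg = pack_region(blk, lo=(0.0, -1.0, -1.0), hi=(0.0995, 1.0, 1.0))
    full = HEADER.size + RECORD.size * len(pts)
    print(f"sent {len(msg)} of {full} bytes")
```

Filtering on the sending side means the transfer volume scales with the size of the region of interest rather than with the size of the whole grid.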

Last Modified: March 4, 1996 (jlj)

Available through: Annual Report 1995 (pages 56-57), Concurrent Supercomputing Consortium, California Institute of Technology, USA, and http://www.cacr.caltech.edu/publications/annreps/annrep95/cfd1.html